A quick look at Azure Logic Apps Enterprise Integration Tools

Azure Logic Apps allows you to quickly build integration solutions, using a visual designer to define the flow of data between various cloud and on-premises data connectors. When I started using Logic Apps, it seemed at first as if JSON was the preferred content type. However, XML, and integration built around XML, is supported through the latest Azure Logic Apps Enterprise Integration Tools.

Moving XML EDI and Integration into the cloud

Core to what I was interested in was having an endpoint provisioned to automatically receive an HTTP request with minimal effort. My next requirement was to validate the XML against a known schema, since we want to validate data before going too far. If the message passed that step, I wanted to either process it or put it on a queue. Lastly, I wanted to end the workflow with a response indicating the status.

This is how it looks at a high level:

The example is trivial but meets the requirement and gives a good idea of what the Logic Apps and Enterprise Integration Tools are capable of and where it may go in the future. Support for EDI and EAI via file and XML using standard industry protocols is available. I won't delve into the specifics of message formats but the flow below should be suitable as a starting point for implementing more advanced message handling.

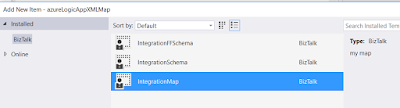
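If you prefer to read the flow as code, the sketch below builds a rough equivalent of the workflow definition (what the designer's code view shows) as a Python dict and prints it as JSON. It is a sketch under assumptions, not the definition behind the screenshot. The action names, the schema name `PurchaseOrder` and the map name `PurchaseOrderToResponse` are hypothetical. The `XmlValidation` and `Xslt` action types are my reading of the workflow definition language. The Azure function that runs the transform in this post is left out.

```python
import json

# Hypothetical names: replace with the schema and map in your integration account.
SCHEMA_NAME = "PurchaseOrder"
MAP_NAME = "PurchaseOrderToResponse"


def build_workflow_definition(schema_name: str, map_name: str) -> dict:
    """Sketch of a request -> validate -> transform -> respond workflow.

    Action types and input shapes are assumptions; compare them with the
    code view of your own Logic App before relying on them.
    """
    return {
        "$schema": "https://schema.management.azure.com/providers/"
                   "Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
        "contentVersion": "1.0.0.0",
        "triggers": {
            # HTTP Request trigger; its URL is generated when the app is saved.
            "manual": {"type": "Request", "kind": "Http", "inputs": {}}
        },
        "actions": {
            "XML_Validation": {
                "type": "XmlValidation",
                "inputs": {
                    "content": "@triggerBody()",
                    "integrationAccount": {"schema": {"name": schema_name}},
                },
                "runAfter": {},
            },
            # Runs only when validation fails, returning an error immediately.
            "Validation_Failed_Response": {
                "type": "Response",
                "inputs": {"statusCode": 400, "body": "Schema validation failed."},
                "runAfter": {"XML_Validation": ["Failed"]},
            },
            "Transform_XML": {
                "type": "Xslt",
                "inputs": {
                    "content": "@triggerBody()",
                    "integrationAccount": {"map": {"name": map_name}},
                },
                "runAfter": {"XML_Validation": ["Succeeded"]},
            },
            # Returns the transformed document to the caller.
            "Response": {
                "type": "Response",
                "inputs": {"statusCode": 200, "body": "@body('Transform_XML')"},
                "runAfter": {"Transform_XML": ["Succeeded"]},
            },
        },
        "outputs": {},
    }


if __name__ == "__main__":
    print(json.dumps(build_workflow_definition(SCHEMA_NAME, MAP_NAME), indent=2))
```

The `runAfter` entries are what wire the steps together: each action names the action it follows and the status (`Succeeded` or `Failed`) that allows it to run.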

The Enterprise Integration Tools let you define BizTalk schemas, which can be used to transform data once it has been received via a trigger. It is really impressive to see how quickly you can define the HTTP Request as a trigger, then validate and transform the data. You do this with the Logic Apps designer together with the Enterprise Integration Tools in Visual Studio 2015. Let's take a quick look at getting started.

Getting started

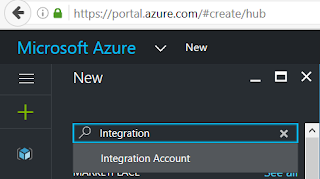

Log in to Azure and create a new Logic App if you have not already done so.

The follow-up posts break this down in more detail, but for now you will also need:

- An Azure function container and an Azure function to run the transform. This is serverless infrastructure, a hot topic at the moment. Imagine running just a function in the cloud, without building a full API around it or worrying about deploying and managing the infrastructure.

- An Azure Integration account configured and linked to the Logic App

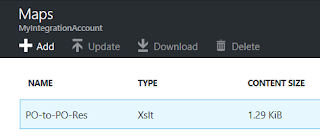

- Specific agreements, partners, schemas and maps to transform the XML

- Postman or curl to POST the requests to trigger the Logic App

With all that in place, we can start linking a few actions together, as shown earlier.

The first action is the HTTP Request, the trigger which will start this workflow when an HTTP endpoint receives a message.

HTTP Request

The Request trigger mentions JSON, which may throw you off, but it works perfectly well with XML. As soon as you save the Logic App, the endpoint URL is generated. We'll use it to post our first message.
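Postman works fine for this. If you would rather script the call, here is a minimal sketch using only the Python standard library. `TRIGGER_URL` is a placeholder for the URL shown once the Logic App is saved. The `<PurchaseOrder>` payload is a hypothetical example, not the schema used in this post.

```python
import urllib.error
import urllib.request

# Placeholder: replace with the URL shown on the HTTP Request trigger
# after saving the Logic App.
TRIGGER_URL = "https://example.invalid/your-logic-app-trigger-url"

# Hypothetical purchase order; a real one must match your .xsd.
SAMPLE_ORDER = (
    "<PurchaseOrder>"
    "<OrderId>1001</OrderId>"
    "<Customer>Contoso</Customer>"
    "</PurchaseOrder>"
)


def build_post(url: str, xml_body: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying an XML body."""
    return urllib.request.Request(
        url,
        data=xml_body.encode("utf-8"),
        headers={"Content-Type": "application/xml"},
        method="POST",
    )


def send(request: urllib.request.Request) -> tuple[int, str]:
    """Send the request and return (status code, response body)."""
    try:
        with urllib.request.urlopen(request, timeout=30) as resp:
            return resp.status, resp.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        # urllib raises on non-2xx statuses; the body still explains the failure.
        return err.code, err.read().decode("utf-8")
```

Usage: `status, body = send(build_post(TRIGGER_URL, SAMPLE_ORDER))`. Keeping request construction separate from sending makes it easy to inspect the request before it goes anywhere.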

XML Validation

I've set up some schemas, which we will look at later. For now, it is enough to know that the content comes from the trigger (the HTTP Request body). I want to validate it against a purchase order schema (.xsd) file. If validation fails, the response returns immediately with an error.
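The real check happens in the XML Validation action, against the .xsd stored in the integration account. The sketch below is only a rough local stand-in, useful for sanity-checking test messages before posting them. It confirms that a document is well-formed and carries a few required elements. It is not XSD validation, and the `PurchaseOrder`, `OrderId` and `Customer` names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical stand-in for the purchase order .xsd: the expected root
# element and the children every order must carry.
REQUIRED_ROOT = "PurchaseOrder"
REQUIRED_CHILDREN = ("OrderId", "Customer")


def validate_order(xml_text: str) -> list[str]:
    """Return a list of problems; an empty list means the order passed.

    Checks well-formedness plus a few structural rules only. This is NOT
    XSD validation, which the XML Validation action performs in the Logic App.
    """
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    errors = []
    if root.tag != REQUIRED_ROOT:
        errors.append(f"expected root <{REQUIRED_ROOT}>, got <{root.tag}>")
    for name in REQUIRED_CHILDREN:
        child = root.find(name)
        # A missing element and an element with only whitespace both fail.
        if child is None or not (child.text or "").strip():
            errors.append(f"missing or empty <{name}>")
    return errors
```

If your schema declares a target namespace, ElementTree reports tags as `{namespace-uri}LocalName`, so the names above would need to include it.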

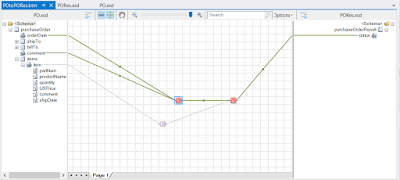

XML Transformation

Create a Transform action, and configure it as necessary.

I'll show how I set this up in more detail in the next post. The goal here is to run the transform via an Azure function, using a specified schema and map to turn my demo purchase order into a response.
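The actual map is covered in the next post. To give a feel for what a map does, here is a hand-written Python equivalent of a very simple purchase order to response mapping. The element names and the `Accepted` status are hypothetical. This is not how the Transform action or the Azure function execute the map; it only illustrates the shape of the transformation.

```python
import xml.etree.ElementTree as ET


def transform_order(order_xml: str) -> str:
    """Map a hypothetical <PurchaseOrder> to an <OrderResponse>.

    A stand-in for a BizTalk-style map: copies the order id and customer
    across and marks the order as accepted.
    """
    order = ET.fromstring(order_xml)
    response = ET.Element("OrderResponse")
    # findtext returns the default when the element is absent.
    ET.SubElement(response, "OrderId").text = order.findtext("OrderId", default="")
    ET.SubElement(response, "Customer").text = order.findtext("Customer", default="")
    ET.SubElement(response, "Status").text = "Accepted"
    return ET.tostring(response, encoding="unicode")
```

For example, `<PurchaseOrder><OrderId>1001</OrderId><Customer>Contoso</Customer></PurchaseOrder>` becomes `<OrderResponse><OrderId>1001</OrderId><Customer>Contoso</Customer><Status>Accepted</Status></OrderResponse>`.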

HTTP Response

If the transform succeeds, I want to return its output to the caller. It is trivial, but the point is to prove that it can be done. I take the output of the previous action as the response body:
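In code view, a Response action that returns the output of the previous step looks roughly like the fragment built below. This sketch assumes an action named `Transform_XML`. The explicit `Content-Type: application/xml` header is my own addition, so that callers see the body as XML; it is not something the screenshot shows.

```python
import json


def response_action(source_action: str, status_code: int = 200) -> dict:
    """Sketch of a Response action returning another action's output.

    `source_action` is the (hypothetical) name of the action whose body is
    returned; the Content-Type header is an assumption, not a requirement.
    """
    return {
        "type": "Response",
        "inputs": {
            "statusCode": status_code,
            "headers": {"Content-Type": "application/xml"},
            "body": f"@body('{source_action}')",
        },
        # Only respond once the source action has succeeded.
        "runAfter": {source_action: ["Succeeded"]},
    }


if __name__ == "__main__":
    print(json.dumps({"Response": response_action("Transform_XML")}, indent=2))
```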

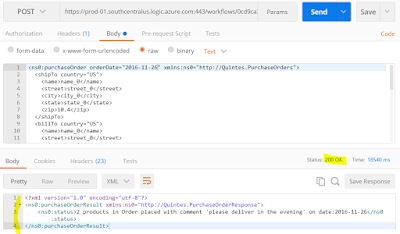

Let's try it out by making a POST via Postman.

The response is probably not the most useful message, and it may need to be XML as well, so we'll solve that another time.

Let's take a look at the Logic App and see where it went wrong. Go to the Logic App Overview blade and look at the most recent error under All Runs. Here it is quite clear that my message failed the XML schema validation check, which is exactly what should happen, so I can tick that off.
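The All Runs list can also be retrieved programmatically through the Azure Resource Manager REST API. The sketch below builds the URL that lists a Logic App's runs filtered to failures, then picks the most recent one from the parsed JSON. Several details are my assumptions about that API: the `2016-06-01` api-version, the `$filter` expression, and the `properties.status` and `properties.startTime` fields. The subscription, resource group and workflow names are placeholders. Authentication (an Azure AD bearer token on the request) is left out.

```python
from typing import Optional
from urllib.parse import quote, urlencode

API_VERSION = "2016-06-01"  # assumed Logic Apps REST api-version


def runs_url(subscription_id: str, resource_group: str, workflow: str) -> str:
    """Build the ARM URL that lists a Logic App's failed runs."""
    path = (
        "https://management.azure.com"
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.Logic/workflows/{quote(workflow)}/runs"
    )
    query = urlencode({"api-version": API_VERSION, "$filter": "status eq 'Failed'"})
    return f"{path}?{query}"


def latest_failed_run(runs_response: dict) -> Optional[dict]:
    """Return the most recently started failed run in a parsed listing, if any."""
    failed = [
        run
        for run in runs_response.get("value", [])
        if run.get("properties", {}).get("status") == "Failed"
    ]
    # Timestamps in the same ISO 8601 format compare correctly as strings.
    return max(failed, key=lambda run: run["properties"].get("startTime", ""), default=None)
```

Send an authenticated GET to the URL from `runs_url`, then pass the parsed JSON to `latest_failed_run`. The local status check is redundant with the server-side filter, but it keeps the helper correct if the filter is dropped.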

Of course, as the workflow becomes richer, we can make decisions and take actions based on these outputs.
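For example, the original requirement to either process a message or put it on a queue could become a Condition action after validation. The sketch assumes a hypothetical `<Priority>` element and reads it with the `xpath()` and `xml()` workflow functions. The expression shape is my assumption about how the designer serialises a Condition. The branch contents are placeholders, since the actual processing and queueing actions depend on the connectors you use.

```python
import json

# Hypothetical rule: orders marked <Priority>High</Priority> are processed
# straight away; everything else is put on a queue.
PRIORITY_XPATH = "string(/PurchaseOrder/Priority)"


def route_by_priority(process_actions: dict, queue_actions: dict) -> dict:
    """Sketch of a Condition ("If") action branching on the validated message."""
    return {
        "type": "If",
        "expression": {
            "and": [
                {"equals": [f"@xpath(xml(triggerBody()), '{PRIORITY_XPATH}')", "High"]}
            ]
        },
        "actions": process_actions,          # runs when the expression is true
        "else": {"actions": queue_actions},  # runs otherwise
        "runAfter": {"XML_Validation": ["Succeeded"]},
    }


if __name__ == "__main__":
    print(json.dumps(route_by_priority({}, {}), indent=2))
```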

What happens if we send a valid message?

The response is a 200, which is perfect for getting started. So how did the run look?

All actions passed and the response returned 200 OK. The response body contains the data produced by the Transform step.

Summary

This four-step workflow is a quick proof of concept of how we could move our XML messaging capability to Azure Logic Apps. From there, other Azure services can be used to build sophisticated Enterprise Integration solutions.

I will follow up with more posts on the specifics of setting this up, and on how the Logic Apps Enterprise Integration Tools can be used to define the transform. Hopefully this helps you start thinking about Enterprise Integration challenges and their solutions in the cloud.

Update - Part 2 here

Update - Part 3 here

@quintes